In modern healthcare, AI promises faster diagnoses, better treatment plans, and more efficient clinical workflows. Building these models is inherently complex, and restricted data access makes it harder still. Hospitals cannot simply pool all their patient data in a single location. Privacy laws, ethical considerations, and sheer logistical hurdles mean that sensitive medical data stays where it is.

So how do we build powerful AI models when the data is scattered across multiple sites? The answer is federated learning, and a simple, reproducible example using open-source lung cancer detection shows exactly how it works in practice.

Federated learning flips the traditional approach to AI on its head. Instead of moving data to a central server, we move the AI model to where the data lives. Each participating hospital trains the model locally, keeping patient data safely behind its own firewall. The only thing shared back is the learned model parameters, which are then securely aggregated.
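The aggregation step can be illustrated with a minimal sketch. It assumes sample-weighted averaging of parameters (the FedAvg rule), which is one common choice; the text above does not say which aggregation rule is used in practice, and the secure-aggregation layer is not shown. The function name `federated_average` and the hospital numbers are hypothetical.

```python
from typing import List, Sequence


def federated_average(
    site_params: Sequence[Sequence[float]],
    site_sizes: Sequence[int],
) -> List[float]:
    """Return the sample-weighted average of per-site parameter vectors.

    Assumption: FedAvg-style weighting, where a site's influence is
    proportional to the number of local samples it trained on.
    """
    if not site_params or len(site_params) != len(site_sizes):
        raise ValueError("need at least one site and one sample count per site")
    total = sum(site_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    n_params = len(site_params[0])
    if any(len(p) != n_params for p in site_params):
        raise ValueError("all sites must send vectors of the same length")

    averaged = [0.0] * n_params
    for params, size in zip(site_params, site_sizes):
        weight = size / total
        for i, value in enumerate(params):
            averaged[i] += weight * value
    return averaged


# Hypothetical parameter vectors sent back by three hospitals after local
# training. Only these numbers leave each site, never the patient data.
hospital_updates = [[0.0, 1.0], [1.0, 3.0], [4.0, 1.0]]
hospital_sizes = [100, 100, 200]  # local sample counts (made up)

global_params = federated_average(hospital_updates, hospital_sizes)
print(global_params)  # [2.25, 1.5]
```

The third hospital holds half of the samples, so its parameters carry half of the weight in the aggregated model.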

This approach has several advantages:

Scaleout Systems has designed and developed a scalable federated learning framework. It works across cloud, on-premise, and even edge devices, ensuring that no matter where the data sits, it can still contribute to a shared AI effort. The framework is free for all academic and research projects. We have provided a public SaaS that can be used for a range of different use cases.

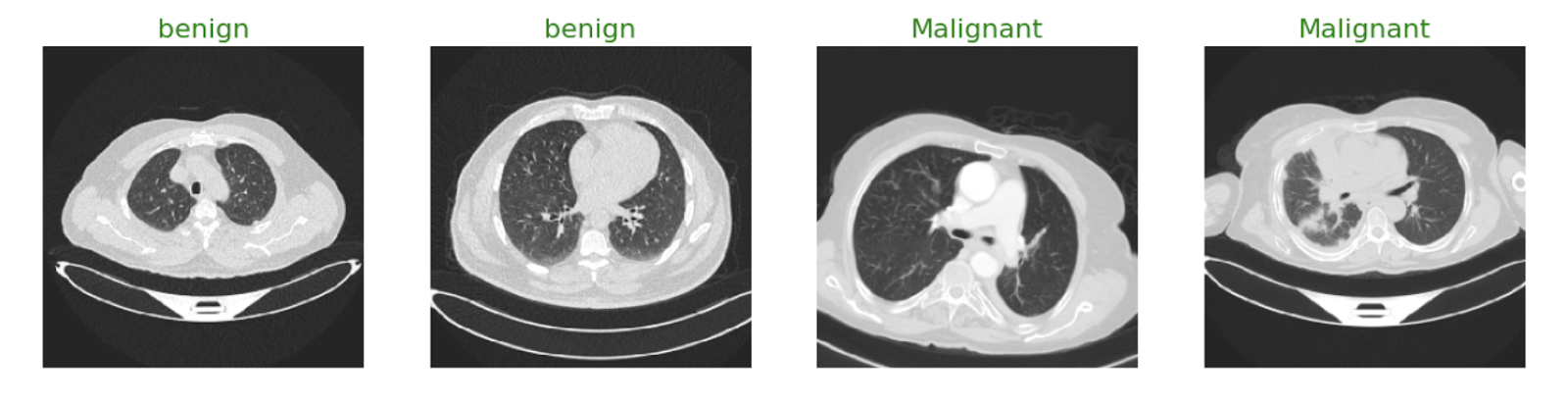

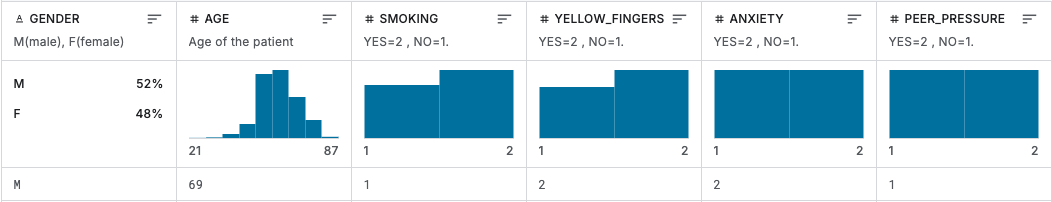

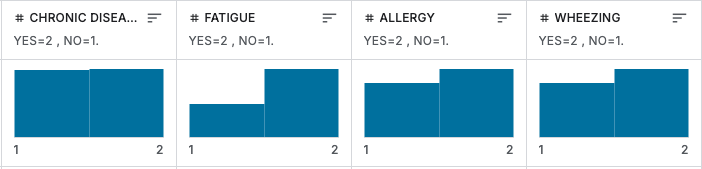

To test the power of federated learning, we built a lung cancer detection model using two open datasets:

We trained the same CNN model in three different collaborative scenarios to see how performance changed as more data sources joined the network. The CNN model and dataset details are available at the link mentioned above. The following results are based on the image dataset. If needed, the tabular data can be used with other models, such as an SVM, to reproduce similar results.

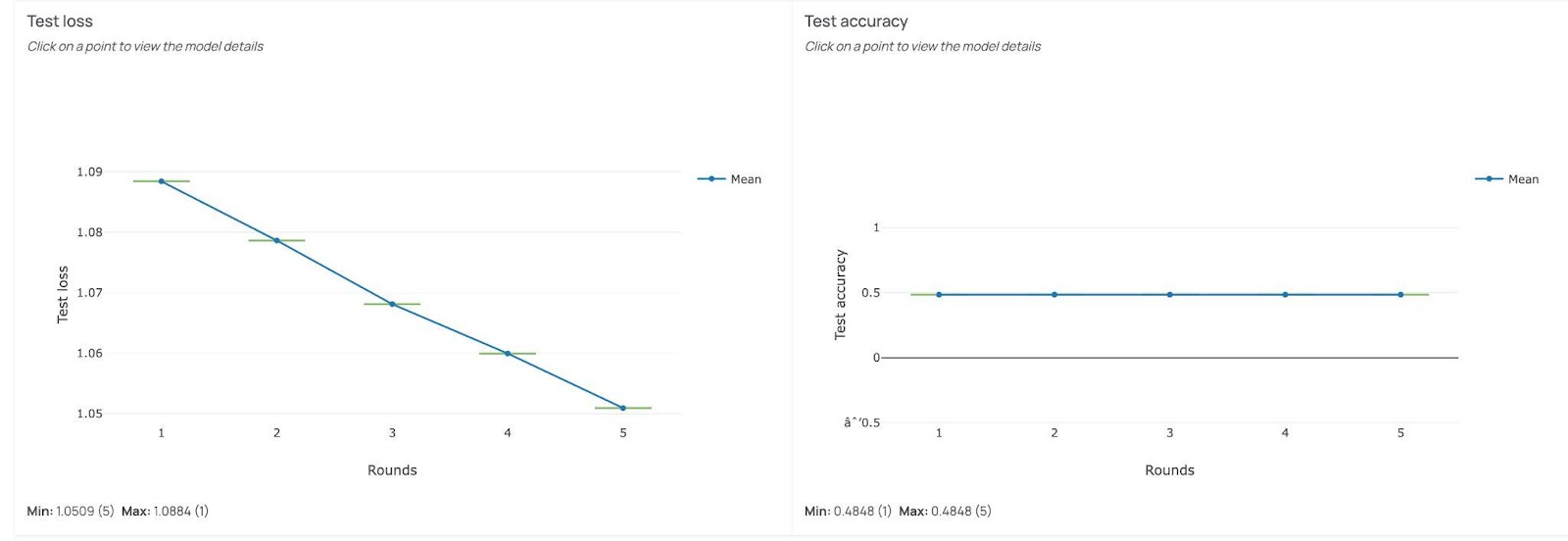

In the first setup, only one site participated. The model’s accuracy stayed around 50%, even though the training loss (y-axis) decreased marginally. With limited and homogeneous data, the model couldn’t learn enough to make reliable predictions.

In the second setup, two sites joined forces. The diversity of the data marginally improved the accuracy. We could see the model benefiting from the broader dataset, but it still had room to grow.

Finally, with three participating sites, the model had access to the full dataset through federated learning. After 100 training rounds, accuracy soared to 98%, a level comparable to centralized training, but without moving a single patient file from its original location.
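To make the idea of training rounds concrete, here is a toy sketch of the loop: each site starts from the current global parameters, trains locally, and sends back only its updated parameters for averaging. Everything in it is an assumption made for illustration: the data is synthetic, the model is a two-parameter logistic regression rather than the CNN, and the helper names are hypothetical. It does not reproduce the results reported above.

```python
import math
import random
from typing import List, Tuple

Sample = Tuple[float, int]  # (feature, label)


def make_site_data(seed: int, n: int) -> List[Sample]:
    """Synthetic stand-in for one site's private data: two 1-D clusters."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        center = 2.0 if label == 1 else -2.0
        data.append((rng.gauss(center, 0.5), label))
    return data


def predict(params: List[float], x: float) -> float:
    """Logistic regression probability for class 1."""
    w, b = params
    return 1.0 / (1.0 + math.exp(-(w * x + b)))


def local_train(params: List[float], data: List[Sample],
                epochs: int = 5, lr: float = 0.1) -> List[float]:
    """Gradient descent on one site's data, starting from the global params."""
    w, b = params
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = predict([w, b], x) - y
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / len(data)
        b -= lr * grad_b / len(data)
    return [w, b]


def run_rounds(sites: List[List[Sample]], rounds: int) -> List[float]:
    """Each round: local training at every site, then weighted averaging."""
    global_params = [0.0, 0.0]
    total = sum(len(d) for d in sites)
    for _ in range(rounds):
        updates = [local_train(global_params, d) for d in sites]
        global_params = [
            sum(len(d) / total * u[i] for d, u in zip(sites, updates))
            for i in range(2)
        ]
    return global_params


def accuracy(params: List[float], data: List[Sample]) -> float:
    correct = sum((predict(params, x) >= 0.5) == (y == 1) for x, y in data)
    return correct / len(data)


sites = [make_site_data(seed, n) for seed, n in [(1, 80), (2, 120), (3, 100)]]
params = run_rounds(sites, rounds=20)
# Pooled here only to score the toy model; in a real federation each site
# would evaluate on its own data.
pooled = [sample for site in sites for sample in site]
final_accuracy = accuracy(params, pooled)
print(f"accuracy after 20 rounds: {final_accuracy:.2f}")
```

Passing a subset such as `sites[:1]` or `sites[:2]` to `run_rounds` mimics the one-site and two-site scenarios, although this toy data is too easy to show the same accuracy gap.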

The lung cancer example is easy to understand, yet it is more than an academic exercise: it demonstrates a repeatable use case. With Scaleout’s framework, hospitals, research centers, and even medical devices can join a federated learning network, each contributing to AI model training while keeping data private.

In one of our recently completed projects, three Swedish hospitals (in Linköping, Lund, and Umeå) used Scaleout’s framework for 3D brain tumor segmentation. This work, the ASSIST project, is a real-world example of how federated learning can address challenging use cases. The team began with a public dataset (BraTS 2020) and then moved on to training with real, private patient scans. The results are promising: faster processing times, improved model performance, and no compromise on patient confidentiality. For further technical details and related publications, please follow this link.

For healthcare professionals, this means better tools for diagnosis and treatment, tools that can be trained using the combined intelligence of multiple hospitals without ever exposing sensitive data. For technical teams, it means a secure, scalable way to deploy machine learning across distributed environments.

Federated learning is not just a technology; it’s a bridge between institutions, enabling collaboration in places where data sharing was once impossible. And in healthcare, that bridge can mean the difference between early detection and a missed opportunity.